3 ways to analyse your 1:1 AI qualitative interviews

Are you really getting all the value you can out of your AI qual datasets? The biggest shift in qualitative research today isn’t speed or automation. It’s volume. With AI moderators now able to run hundreds of one-on-one interviews at once, the scale of qualitative research being generated has exploded.

This creates a new challenge for insight teams: how do you analyse rich, unstructured data at this scale without drowning? Traditional methods – manual coding, sifting through quotes, summarising themes – don’t work when you’ve run a study involving 500 interviews. Either analysis takes too long, or you’re leaving insights on the table.

That’s where having clean, go-to frameworks for analysis comes in. Through extensive testing and trialling (read: putting our work under the noses of our most demanding clients!), the analytics team at Focaldata has identified three powerful frameworks you can now use with your large qual datasets: Laddering Analysis, Topic Modelling and Sentiment Analysis, and Generative Recommendations. These are not as scary as they sound – they’re real, usable ways to get more out of your qual data. Let’s jump in.

1. Laddering Analysis: Digging Into the "Why" at Scale

Qual research has always been about discovering underlying motivations for beliefs and behaviours. A skilled moderator asking the right follow-ups will unveil the chain of reasons behind a particular view.

One of the most enduring frameworks for unpacking these ‘chains of reasoning’ is means-end theory – a model of consumer decision-making developed in the 1980s that links concrete product attributes to emotional consequences and, ultimately, the personal values that shape behaviour. It’s the backbone of classic laddering interviews: start with what someone likes, then keep asking “why?” until you reach what truly matters to them.

Traditionally, this kind of analysis was hard to scale. You needed a highly skilled moderator to elicit the full chain, and an even more experienced qual analyst to reconstruct those motivational ladders from a small set of transcripts. It worked well — but only in slow, one-project-at-a-time qual.

AI changes that. With AI qualitative platforms, laddering now runs automatically, encoded directly in the structure of the interviews — and at scale. The AI moderator doesn’t just ask surface questions; it keeps probing with personalised follow-ups like “Why is that important to you?” or “What does that give you?” to uncover the full path from feature to feeling to value. And instead of doing this 10 times in a focus group setting, it does it 500 times in parallel.

The analysis becomes more straightforward, too. Instead of manually reading transcripts, extracting quotes, and then building ladders, analysts can rely on AI for tagging and aggregation. In a skincare study, for example, it might find that 38% of participants linked “natural ingredients” → “skin feels healthy” → “sense of self-care,” while another cluster tied “well-known brand” → “social credibility” → “feeling confident in public.”
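To make the aggregation step concrete, here is a minimal sketch in Python. It assumes the tagging stage has already reduced each interview to one (attribute, consequence, value) ladder. The data, the `tagged_ladders` name and the `ladder_shares` helper are all hypothetical and purely illustrative, not a description of how any particular platform works.

```python
from collections import Counter

# Hypothetical tagged output: one (attribute, consequence, value) ladder
# per participant. These five rows are invented for illustration only.
tagged_ladders = [
    ("natural ingredients", "skin feels healthy", "sense of self-care"),
    ("well-known brand", "social credibility", "feeling confident in public"),
    ("natural ingredients", "skin feels healthy", "sense of self-care"),
    ("natural ingredients", "skin feels healthy", "sense of self-care"),
    ("well-known brand", "social credibility", "feeling confident in public"),
]


def ladder_shares(ladders):
    """Return each distinct ladder with the share of participants who expressed it,
    most common first."""
    counts = Counter(ladders)
    total = len(ladders)
    return [(ladder, count / total) for ladder, count in counts.most_common()]


for ladder, share in ladder_shares(tagged_ladders):
    print(" → ".join(ladder), f"({share:.0%} of participants)")
```

In a real study a participant may express several ladders or none, so the denominator would need to be the number of participants rather than the number of ladders.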

This approach doesn't just ‘ladder’ faster — it’s a step-change in what’s possible from a quantification perspective. Because AI can conduct and analyse hundreds of laddering interviews in parallel — with consistent probing and structured tagging — it’s now theoretically possible to capture the full range of reasoning pathways that exist within a population. In other words, you’re getting the richness and nuance of qual, combined with the statistical grounding of quant. You can say not only why people behave a certain way, but how many people think that way, and how those motivational structures vary by audience segment. For the first time, means-end theory has become a truly scalable, population-level framework — not just a conceptual model or a one-off workshop exercise.

2. Topic Modelling and Sentiment Analysis

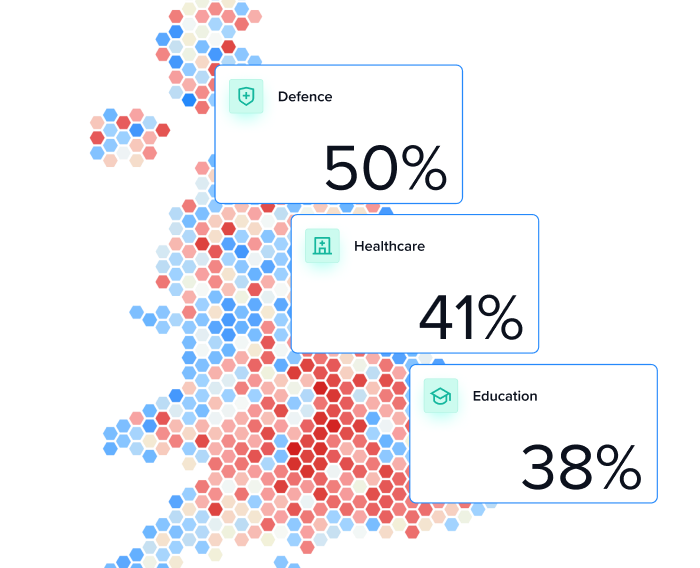

Topic modelling and sentiment analysis aren’t new. But with hundreds of one-on-one interviews running in parallel, you now have access to a volume of data and a level of emotional depth that rival social media listening.

The real breakthrough, however, comes when you combine the two core capabilities: topic modelling (what people are talking about) and sentiment analysis (how they feel about it). One without the other gives you an incomplete picture. Just because something is frequently mentioned doesn’t mean it carries emotional weight, and just because something provokes strong feelings doesn’t mean it’s widely felt. To get to 'wow' level insight, you need both salience and sentiment.

Take a look at the plot above. It maps brand attributes by how often they’re mentioned (topic frequency) and how positively they’re discussed (sentiment). The pattern is clear — and, for this particular brand’s marketing team, it matters strategically. Some of the most positively received attributes are barely mentioned (‘hidden strengths’), while one of the most frequently mentioned is among the least valued. That mismatch suggests the brand’s current perception is skewed away from where its value truly lies. For insight teams, the implication is direct: double down on the under-leveraged perceptions, and rethink how you’re communicating the attributes that currently dominate but aren’t driving positive sentiment.
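The frequency-versus-sentiment reading described above can be sketched in a few lines of Python. This is an illustrative example under stated assumptions: the `mentions` data is invented, the sentiment scale (-1 to +1) and both cutoffs are arbitrary choices, and every quadrant label other than "hidden strength" is our own naming for the sake of the example.

```python
from statistics import mean

# Hypothetical input: one (attribute, sentiment score) pair per coded mention,
# with sentiment on a -1 (negative) to +1 (positive) scale.
mentions = [
    ("price", -0.4), ("price", -0.2), ("price", -0.6), ("price", 0.0),
    ("durability", 0.8),
    ("customer service", 0.6), ("customer service", 0.4), ("customer service", 0.5),
]


def classify(mentions, freq_cutoff=0.3, sentiment_cutoff=0.0):
    """Place each attribute in a quadrant by its share of mentions and mean sentiment.

    Both cutoffs are assumptions; in practice they would be set per study.
    """
    total = len(mentions)
    scores_by_attribute = {}
    for attribute, score in mentions:
        scores_by_attribute.setdefault(attribute, []).append(score)

    quadrants = {}
    for attribute, scores in scores_by_attribute.items():
        frequent = len(scores) / total >= freq_cutoff
        positive = mean(scores) > sentiment_cutoff
        if positive and not frequent:
            quadrants[attribute] = "hidden strength"
        elif positive:
            quadrants[attribute] = "established strength"
        elif frequent:
            quadrants[attribute] = "dominant weakness"
        else:
            quadrants[attribute] = "minor weakness"
    return quadrants


for attribute, quadrant in classify(mentions).items():
    print(f"{attribute}: {quadrant}")
```

With this toy data, the rarely mentioned but well-liked attribute lands in the "hidden strength" quadrant, while the most talked-about attribute is flagged as one that dominates without driving positive sentiment.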

3. Generative Recommendations

Even great qual insight can stall out if it doesn’t translate into action. Traditionally, researchers spend days synthesising interviews into recommendations. By the time the team gets answers, the window of opportunity might have closed.

AI changes the timeline — and the workflow. You can now ask the system questions like “What should we change about this ad?” or “How can we make this campaign clearer?” and get pointed, evidence-based suggestions in minutes.

Example: A charity tests a new fundraising video with AI-led interviews. Instead of manually reviewing transcripts, they ask the platform for optimisations. The AI recommends tightening the call-to-action and elevating an emotional story that resonated.

We’ve seen this in live client work: Edgewell used the platform to test a skincare launch — 50 interviews, insights the next day, and immediate changes to product naming and messaging. The Dairy Farmers of Wisconsin ran 500 interviews to refine sustainability messaging — and discovered entirely new angles they hadn’t considered.

This isn’t just stop-start qualitative research. It’s a continuous feedback engine that helps you optimise content, using millions of feedback points from real participants.